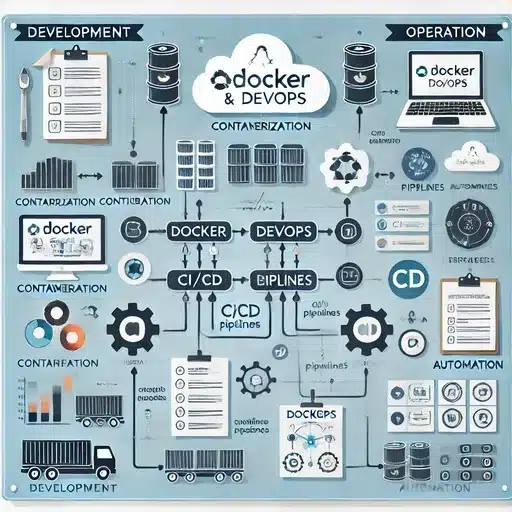

DevOps and Docker have become central to modernizing software workflows. DevOps streamlines collaboration and processes, while Docker changes how applications are packaged and deployed. In this article, we’ll explore their relationship, covering their features, benefits, and why they matter for today’s software teams.

Understanding DevOps

DevOps is a transformative methodology that bridges the gap between development and operations teams within an organization. By fostering a culture of collaboration and shared responsibility, DevOps aims to streamline the software development lifecycle, enabling faster, more reliable releases. This approach integrates continuous integration, continuous delivery, and automation tools to minimize manual errors, accelerate deployment times, and improve system stability.

Ultimately, DevOps enhances operational efficiency, promotes innovation, and helps businesses respond swiftly to changing market demands, ensuring high-quality software reaches users faster and with greater consistency.

Key Components of DevOps:

- Continuous Integration (CI): Developers regularly merge code changes into a shared repository.

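- Continuous Delivery and Deployment (CD): Automates the release of software updates, either up to a release-ready build (delivery) or all the way to production (deployment).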

- Infrastructure as Code (IaC): Manages infrastructure through version-controlled code for consistency.

- Monitoring and Feedback: Tracks system performance to identify issues and improve processes.

What is Docker?

Docker is an open-source containerization platform designed to streamline the development, testing, and deployment of applications. By packaging an application along with all its necessary dependencies, such as libraries, system tools, and configurations, into a lightweight, portable container, Docker ensures that the application runs consistently across different environments, whether on a developer’s local machine, a testing server, or a production cloud environment.

This container-based approach eliminates the “it works on my machine” problem, making software delivery more reliable and efficient. Docker also supports rapid scaling, easy version control, and simplified collaboration among development teams, making it an essential tool in modern DevOps practices.

Why It Matters:

- Portability: Containers run seamlessly across various environments, from local development to cloud servers.

- Efficiency: Docker containers share the host OS kernel, making them lighter and faster to start than virtual machines.

- Scalability: Docker makes it easier to scale applications by deploying multiple container instances.
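
As a minimal sketch of the packaging idea described above, the following shell script writes a one-file Python app and a Dockerfile, then builds and runs the image. The app, the image name hello-app:dev, and the python:3.12-slim base image are illustrative assumptions rather than anything prescribed by Docker, and the Docker commands run only if a Docker daemon is reachable.

```shell
# Work in a scratch directory so nothing in the current project is overwritten.
demo_dir=$(mktemp -d)
cd "$demo_dir"

# Hypothetical one-line application.
cat > app.py <<'EOF'
print("hello from a container")
EOF

# The Dockerfile records the runtime (base image) and the app together.
cat > Dockerfile <<'EOF'
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
EOF

# Build and run only when a Docker daemon is available.
if docker info >/dev/null 2>&1; then
  docker build -t hello-app:dev .
  docker run --rm hello-app:dev
fi
```

Because the base image and the application travel together, the same image should behave the same way wherever a compatible Docker engine runs it.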

Docker’s Role in DevOps

Docker is a cornerstone of the DevOps ecosystem, supporting every stage of the software development lifecycle. Let’s break it down:

1. Development

Docker allows developers to create isolated environments that closely mirror production.

- Example: A developer can work on a Python app in a container while another uses Node.js without conflicts.
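
The example above can be sketched with two throwaway containers. The image tags are assumptions chosen for illustration; any official Python and Node.js tags would work the same way.

```shell
# Image tags are illustrative assumptions; any official tags would do.
PY_IMAGE="python:3.12-slim"
NODE_IMAGE="node:20-slim"

# Each command starts a throwaway container (--rm) with its own runtime;
# neither Python nor Node.js has to be installed on the host.
if docker info >/dev/null 2>&1; then
  docker run --rm "$PY_IMAGE" python --version
  docker run --rm "$NODE_IMAGE" node --version
fi
```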

2. Testing

Containers ensure that applications behave the same way in testing environments as in production.

- Benefit: Eliminates the “it works on my machine” problem.

3. Deployment

With Docker, deploying an application comes down to shipping a container image to production.

- Real-World Scenario: An image built and run on your laptop can be deployed to a Kubernetes cluster in the cloud without modification.
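
One possible shape for that laptop-to-cluster path is sketched below. The function name ship_image, the deployment name hello-app, and the registry host in the usage note are hypothetical, and the sketch assumes you are already logged in to the registry and have kubectl pointed at a cluster.

```shell
# ship_image LOCAL_TAG REMOTE_REF
# Tags a locally built image for a registry, pushes it, and asks Kubernetes
# to run that exact image. All names here are hypothetical.
ship_image() (
  set -eu                                   # stop at the first failing step
  local_tag="$1"
  remote_ref="$2"
  docker tag "$local_tag" "$remote_ref"     # give the image its registry name
  docker push "$remote_ref"                 # upload it to the registry
  kubectl create deployment hello-app --image="$remote_ref"
)
```

A call might look like `ship_image hello-app:dev registry.example.com/team/hello-app:1.0.0`. The image that was tested locally is the one the cluster pulls, which is what makes the "without modification" claim hold.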

4. Operations

Operations teams use Docker to monitor and manage containers, ensuring high availability and efficient resource usage.

Key Features

1. Containerization

Docker’s core feature is containerization, which packages code, libraries, and dependencies into a single unit.

2. Docker Hub

A hosted registry where Docker images can be stored and shared with the team or community.

3. Docker Compose

A tool for defining and running multi-container applications, simplifying the setup of complex environments.
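
A minimal sketch of a multi-container setup follows. The two services (an nginx web server and a Redis cache), their names, and the port mapping are assumptions made for illustration. The script uses the `docker compose` form from Compose V2; the older standalone `docker-compose` binary accepts the same subcommands.

```shell
demo_dir=$(mktemp -d)
cd "$demo_dir"

# Two hypothetical services described in one file.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:alpine
EOF

if docker info >/dev/null 2>&1; then
  docker compose up -d     # start both services in the background
  docker compose ps        # list the services that are running
  docker compose down      # stop and remove them again
fi
```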

4. Lightweight Virtualization

Unlike traditional virtual machines, which each run a full guest operating system, Docker containers share the host OS kernel, making them faster to start and more resource-efficient.

Benefits

- Consistency Across Environments: Ensures the application works the same in development, testing, and production.

- Faster Time-to-Market: Streamlines workflows, enabling faster releases.

- Improved Collaboration: Teams can share containerized environments, reducing misunderstandings.

- Cost-Effective: Optimizes resource usage by running multiple containers on a single machine.

Common Docker Commands

Here’s a cheat sheet for some essential commands:

| Command | Purpose |
|---|---|
| docker build | Build an image from a Dockerfile. |
| docker run | Run a container from an image. |
| docker ps | List running containers. |
| docker stop | Stop a running container. |
| docker-compose up (docker compose up in Compose V2) | Start services defined in a Compose file. |
| docker logs | View logs from a container. |
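
To see how several of these commands fit together, here is a short sketch of a container's lifecycle. The container name web-demo, the nginx:alpine image, and the port mapping are assumptions for this walkthrough.

```shell
CONTAINER_NAME="web-demo"   # hypothetical name for this walkthrough

if docker info >/dev/null 2>&1; then
  # Start nginx in the background (-d) and remove it once it stops (--rm).
  docker run -d --rm --name "$CONTAINER_NAME" -p 8080:80 nginx:alpine
  docker ps --filter "name=$CONTAINER_NAME"   # confirm it is running
  docker logs "$CONTAINER_NAME"               # inspect its output
  docker stop "$CONTAINER_NAME"               # stop it; --rm then removes it
fi
```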

Best Practices

- Use Trusted Images: Start with official or verified base images from Docker Hub to reduce security risk.

- Keep Images Lightweight: Remove unnecessary dependencies to reduce image size.

- Automate Builds: Use CI/CD pipelines to automate image creation and testing.

- Monitor Containers: Use tools like Prometheus and Grafana to track container performance.

- Implement Security Measures: Regularly scan images for vulnerabilities using tools like Docker Scout (the successor to the older docker scan command) or Trivy.
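
Several of these practices can be combined in one place. The sketch below assumes a hypothetical Python project with an app.py and a requirements.txt; the ignore patterns, the user name appuser, and the image name hello-app:dev are illustrative choices, not requirements.

```shell
demo_dir=$(mktemp -d)
cd "$demo_dir"

# Keep the build context small: files matched here are not sent to the daemon.
cat > .dockerignore <<'EOF'
.git
__pycache__/
*.pyc
.env
EOF

cat > Dockerfile <<'EOF'
# Small official base image instead of a full OS image.
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
# --no-cache-dir avoids storing pip's download cache in the image layer.
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
# Run as an unprivileged user rather than root.
RUN useradd --create-home appuser
USER appuser
CMD ["python", "app.py"]
EOF

# Optional vulnerability scan; attempted only if Trivy is installed, and it
# expects an image named hello-app:dev to have been built already.
if command -v trivy >/dev/null 2>&1; then
  trivy image hello-app:dev
fi
```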

Real-World Use Cases

Microservices

Docker fits microservices architectures well, because each service can run in its own container and be built, deployed, and scaled independently.

Hybrid Cloud Environments

Containers provide a flexible and consistent way to package applications, enabling them to move between on-premises servers and various cloud platforms. Because containers encapsulate all the necessary components (code, runtime, system tools, and libraries), they ensure that an application behaves the same regardless of where it’s deployed. This portability reduces compatibility issues and simplifies migration or hybrid cloud strategies. Whether running in a private data center, a public cloud, or a combination of both, containers help organizations maintain operational consistency, accelerate deployment, and optimize resource utilization across diverse environments.

CI/CD Pipelines

Docker plays a crucial role in simplifying Continuous Integration and Continuous Deployment (CI/CD) pipelines by providing consistent, reproducible environments for building, testing, and deploying code. By containerizing applications and their dependencies, Docker removes many of the environment-related issues that cause build failures or deployment errors. This consistency means that code behaves the same way from a developer’s machine to staging and production servers. As a result, Docker streamlines automation, accelerates release cycles, reduces integration problems, and makes software delivery faster and more predictable.
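
A pipeline step built on this idea might look like the sketch below. The function name ci_pipeline, the use of the short Git commit hash as the image tag, and the Python unittest command are all assumptions; a real pipeline would substitute its own test command and registry.

```shell
# ci_pipeline IMAGE_REPO
# Builds an image tagged with the current commit, runs the test suite inside
# that image, and pushes it only if every earlier step succeeded.
ci_pipeline() (
  set -eu                                   # abort on the first failing step
  image="$1:$(git rev-parse --short HEAD)"  # tag the image with the commit hash
  docker build -t "$image" .
  docker run --rm "$image" python -m unittest discover -s tests
  docker push "$image"
)
```

A CI job could call it as `ci_pipeline registry.example.com/team/hello-app` (a hypothetical repository). Because the tests run inside the image that gets pushed, the tested artifact and the deployed artifact are the same.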

Challenges

While Docker offers numerous advantages, it comes with challenges:

- Learning Curve: New users may find the ecosystem overwhelming.

- Security Risks: Misconfigured containers can expose vulnerabilities.

- Storage Overhead: Images, stopped containers, volumes, and build cache accumulate on disk and need regular cleanup.

These challenges can be addressed with proper training and best practices.
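
For the storage point in particular, a small housekeeping helper is one option. The function name docker_cleanup is hypothetical, and the sketch is only defined here rather than run, since pruning deletes data.

```shell
# docker_cleanup: report disk usage, then reclaim unused space.
docker_cleanup() {
  docker system df          # space used by images, containers, volumes, cache
  docker system prune -f    # remove stopped containers, unused networks,
                            # dangling images, and build cache without prompting
}
```

Running `docker_cleanup` on a schedule, or at the end of CI jobs, is one way to keep build hosts from filling up. Review what prune removes before using it on a machine that holds data you need.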

Docker is an indispensable tool in the DevOps toolkit, streamlining workflows and enhancing collaboration between teams. Its ability to create portable, lightweight, and consistent environments makes it a game-changer for modern software development.

By adopting Docker in your DevOps pipeline, you can accelerate delivery, improve resource efficiency, and ensure a seamless transition from development to production. Whether you’re a beginner or a seasoned professional, Docker’s versatility and power can transform the way you build and deploy applications.
